Omnidirectional multi-view stereo matching and visual SLAM algorithms

Unique camera system with four ultra-wide field-of-view lenses

Applications to autonomous navigation of mobile robots and photorealistic 3D scene modeling

Professor Jongwoo Lim, from the Department of Mechanical Engineering, develops camera-based 3D sensing algorithms and large-scale 3D reconstruction methods. His research primarily targets robotics and augmented/virtual reality (AR/VR) applications, with a particular emphasis on autonomous robot navigation, efficient 3D scene modeling, and accurate object monitoring within complex environments.

Contemporary autonomous driving solutions often rely heavily on expensive LiDAR sensors, which limits their widespread integration into everyday life. Even with this sensor configuration, blind spots persist in close proximity to the vehicle, potentially causing obstacles or pedestrians to be overlooked. As a result, minimizing these blind spots and monitoring all directions around the vehicle have become crucial safety priorities.

In camera-based distance measurement, stereo algorithms have garnered significant attention. Traditional stereo algorithms primarily use conventional narrow field-of-view (FoV) images, which makes it difficult to capture large areas in a single frame. To address this issue, Professor Lim's research explores ultra-wide FoV fisheye lenses that cover more than 200 degrees. However, multi-view stereo matching of images from such cameras requires a novel algorithm, because computing consistent and accurate 360-degree omnidirectional depth from heavily distorted fisheye images is considerably more complex.
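To illustrate why a lens covering more than 200 degrees falls outside the conventional camera model, the sketch below compares the pinhole projection r = f·tan(θ) with the equidistant fisheye projection r = f·θ, where θ is the angle between an incoming ray and the optical axis. The equidistant model and the focal length of 300 pixels are assumptions chosen for illustration; the article does not state which lens model the actual cameras follow.

```python
import math


def pinhole_radius_px(theta_deg, focal_px):
    """Image radius under the pinhole model r = f * tan(theta).

    Returns None for rays at or beyond 90 degrees from the optical axis,
    which a pinhole camera cannot image.
    """
    if theta_deg >= 90.0:
        return None
    return focal_px * math.tan(math.radians(theta_deg))


def equidistant_radius_px(theta_deg, focal_px):
    """Image radius under the (assumed) equidistant fisheye model r = f * theta."""
    return focal_px * math.radians(theta_deg)


if __name__ == "__main__":
    focal_px = 300.0  # hypothetical focal length in pixels
    for theta_deg in (10.0, 45.0, 80.0, 100.0):
        print(theta_deg,
              pinhole_radius_px(theta_deg, focal_px),
              equidistant_radius_px(theta_deg, focal_px))
```

A ray 100 degrees off-axis, which a 200-degree lens still sees, has no pinhole projection at all, while the fisheye model maps it to a finite radius. This is one reason standard rectified stereo cannot be applied directly to such images.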

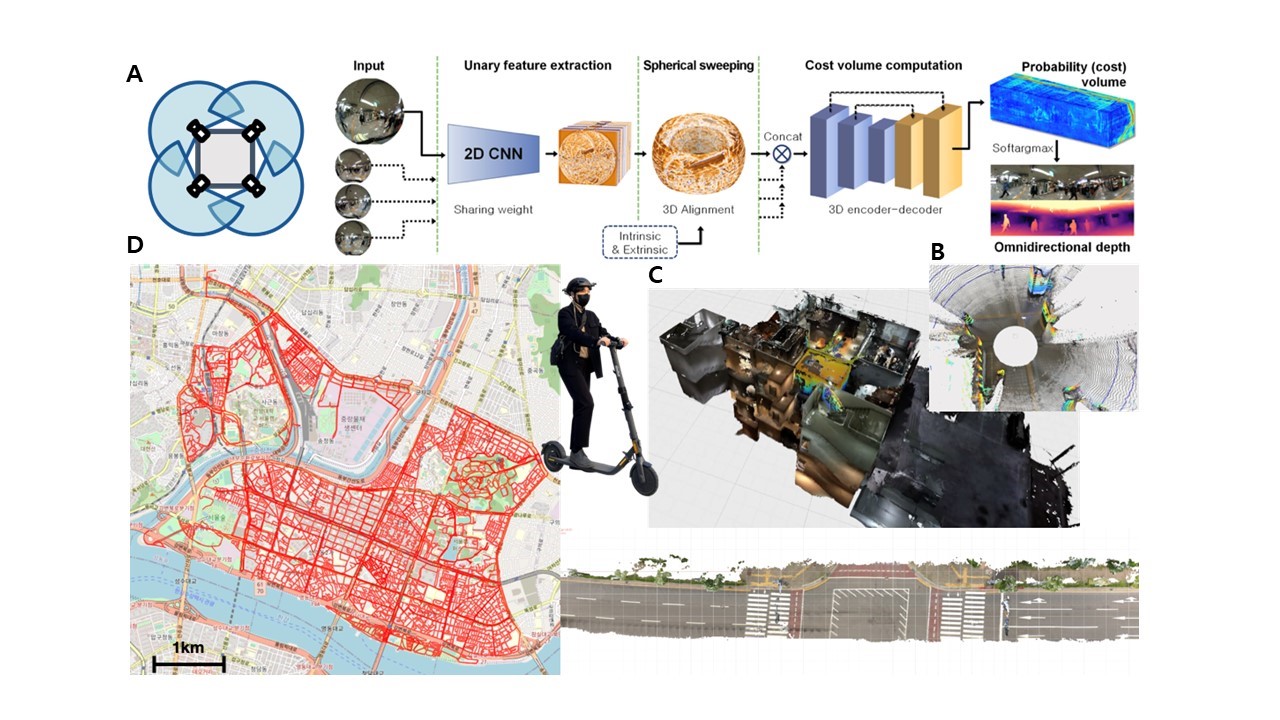

With a focus on omnidirectional depth and motion estimation algorithms using a minimal number of cameras, Professor Lim has devised a unique sensor configuration utilizing four ultra-wide FoV cameras, facilitating 360-degree omnidirectional sensing. This configuration ensures that all points within the sensing range are captured by at least two cameras, enabling depth computation through stereo matching. To mitigate distortions and facilitate seamless scene matching from different viewpoints, a deep neural network was trained using numerous synthetically generated training images. The resulting OmniMVS framework,[1, 2] based on the Spherical Sweeping method, effectively adapts to changes in camera configuration, maximizing the utilization of all visual features and structural information in the images. Notably, the network was rigorously tested with both synthetic and real test sequences, producing markedly clear and accurate results compared to previous methodologies.
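The claim that every point is seen by at least two cameras can be checked with a simplified geometric sketch. It assumes four cameras facing outward at 90-degree intervals in the horizontal plane and treats scene points as far enough away that the offsets between cameras can be ignored; the actual rig layout is not specified in this article, so the yaw angles below are hypothetical.

```python
def angular_difference_deg(a_deg, b_deg):
    """Smallest absolute difference between two azimuth angles, in degrees."""
    d = (a_deg - b_deg) % 360.0
    return min(d, 360.0 - d)


def cameras_seeing(azimuth_deg, camera_yaws_deg, fov_deg):
    """Yaw angles of the cameras whose field of view contains the direction."""
    half_fov = fov_deg / 2.0
    return [yaw for yaw in camera_yaws_deg
            if angular_difference_deg(azimuth_deg, yaw) <= half_fov]


def min_coverage(camera_yaws_deg, fov_deg, step_deg=1.0):
    """Minimum number of cameras observing any sampled horizontal direction."""
    n_samples = int(round(360.0 / step_deg))
    return min(len(cameras_seeing(i * step_deg, camera_yaws_deg, fov_deg))
               for i in range(n_samples))


if __name__ == "__main__":
    yaws = [0.0, 90.0, 180.0, 270.0]  # assumed outward-facing layout
    for fov in (120.0, 200.0):
        print(fov, min_coverage(yaws, fov))
```

Under these assumptions, a 200-degree FoV leaves no direction covered by fewer than two cameras, whereas a 120-degree FoV leaves directions that only one camera sees and where stereo matching is therefore impossible.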

Beyond distance measurement, estimating the vehicle's ego-motion is crucial for navigation planning and control. The aforementioned camera configuration is well suited to computing ego-motion, even in challenging scenarios such as heavily congested environments. By tracking the static structures within the scene, the system produces stable and accurate motion estimates. Through the integration of the ego-motion trajectory and the 360-degree depth maps, a comprehensive 3D mesh model of the world can be reconstructed. This approach, known as the OmniSLAM algorithm,[3] earned recognition as a finalist for the Best Robot Vision paper at ICRA 2020.
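The step of combining an estimated pose with a 360-degree depth map can be sketched as follows. The example assumes the depth map is stored as an equirectangular panorama and uses a planar pose (yaw plus translation) for brevity; a full system would use a 6-DoF pose. The function names and axis conventions are hypothetical and are not taken from the OmniSLAM implementation.

```python
import math


def equirect_pixel_to_ray(u, v, width, height):
    """Unit ray for pixel (u, v) of an equirectangular panorama.

    Assumed convention: azimuth runs from -pi to pi across the width,
    elevation from +pi/2 (top row) to -pi/2 (bottom row), z points up.
    """
    azimuth = (u + 0.5) / width * 2.0 * math.pi - math.pi
    elevation = math.pi / 2.0 - (v + 0.5) / height * math.pi
    return (math.cos(elevation) * math.cos(azimuth),
            math.cos(elevation) * math.sin(azimuth),
            math.sin(elevation))


def rig_point_to_world(point, yaw_rad, translation):
    """Rotate a rig-frame point about the z axis by yaw, then translate."""
    x, y, z = point
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    tx, ty, tz = translation
    return (c * x - s * y + tx, s * x + c * y + ty, z + tz)


def backproject_to_world(u, v, depth_m, width, height, yaw_rad, translation):
    """Lift one depth-map pixel to a 3D point in the world frame."""
    ray = equirect_pixel_to_ray(u, v, width, height)
    point_rig = tuple(depth_m * r for r in ray)
    return rig_point_to_world(point_rig, yaw_rad, translation)


if __name__ == "__main__":
    # Centre of a 360x180 panorama, 2 m away, rig rotated 90 degrees at (1, 0, 0).
    print(backproject_to_world(179.5, 89.5, 2.0, 360, 180,
                               math.pi / 2.0, (1.0, 0.0, 0.0)))
```

Applying this to every pixel of every depth map along the trajectory yields a point cloud in a common frame, from which a mesh can then be built.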

Apart from his contributions to 3D sensing and motion estimation, Professor Lim has also made a significant impact in the area of visual object tracking. His pioneering work includes the development of various visual tracking algorithms and the establishment of a benchmark for the fair evaluation of tracking performance in a wide range of real-world scenarios. This benchmark,[4] now a standard method for assessing tracking algorithms, received the Longuet-Higgins Prize in 2023, a 10-year test-of-time award presented by the Computer Vision Foundation.

Building upon these achievements, Professor Lim co-founded a start-up company, MultiplEYE Co. Ltd., with two of his Ph.D. students who were involved in the OmniSLAM project. The company has successfully applied camera systems with ultra-wide FoV lenses to various practical applications, including wearable helmet capture devices, navigation sensors for mobile robots, and an HD-map capture sensor mounted on a car. Through their work,[5, 6] they have demonstrated the effectiveness of their sensor in mobile robot navigation and 3D environment modeling, emphasizing its user-friendly nature even for non-expert users. Furthermore, the captured data contains comprehensive visual and structural information about the environment, which they are leveraging to construct photorealistic 3D models using advanced neural rendering algorithms such as NeRFs or Gaussian Splatting.

Figure

From the top left corner in a clockwise direction: (A) Configuration of the wide-angle camera sensor (B) The structure of the OmniMVS deep neural network (C) A 3D model using OmniSLAM – a 6-story building and a road (D) Scanning results for an area of about 15 km² between Seongdong-gu and Gwangjin-gu.

References

1. C. Won, J. Ryu, J. Lim, “OmniMVS: End-to-End Learning for Omnidirectional Stereo Matching,” ICCV 2019

2. C. Won, J. Ryu, J. Lim, “End-to-End Learning for Omnidirectional Stereo Matching with Uncertainty Prior,” IEEE Trans. on Pattern Analysis and Machine Intelligence, 43 (11), 3850-3862, 2021

3. C. Won, H. Seok, Z. Cui, M. Pollefeys, J. Lim, “OmniSLAM: Omnidirectional Localization and Dense Mapping for Wide-Baseline Multi-Camera Systems,” ICRA 2020, 559-566

4. Y. Wu, J. Lim, M-H. Yang, “Online Object Tracking: A Benchmark,” CVPR 2013, 2411-2418

5. H. Seok, J. Lim, “ROVINS: Robust Omnidirectional Visual Inertial Navigation System,” IEEE Robotics and Automation Letters (RA-L), 5 (4), 6225-6232, 2020

6. S. Yang, J. Lim, “Online Extrinsic Correction of Multi-Camera Systems by Low-Dimensional Parameterization of Physical Deformation,” IROS 2022, 7944-7951
