Managing structural change for long-term mapping

Utilizing non-conventional sensors in extreme environments for spatial AI

Enhancing sensing with complementary sensor fusion

Professor Ayoung Kim, from the Department of Mechanical Engineering, leads the Robust Perception and Mobile Robotics Lab (RPM Robotics Lab). The laboratory conducts a range of research in spatial artificial intelligence (AI), which involves using sensors to perceive the environment and then building scene understanding through mapping.

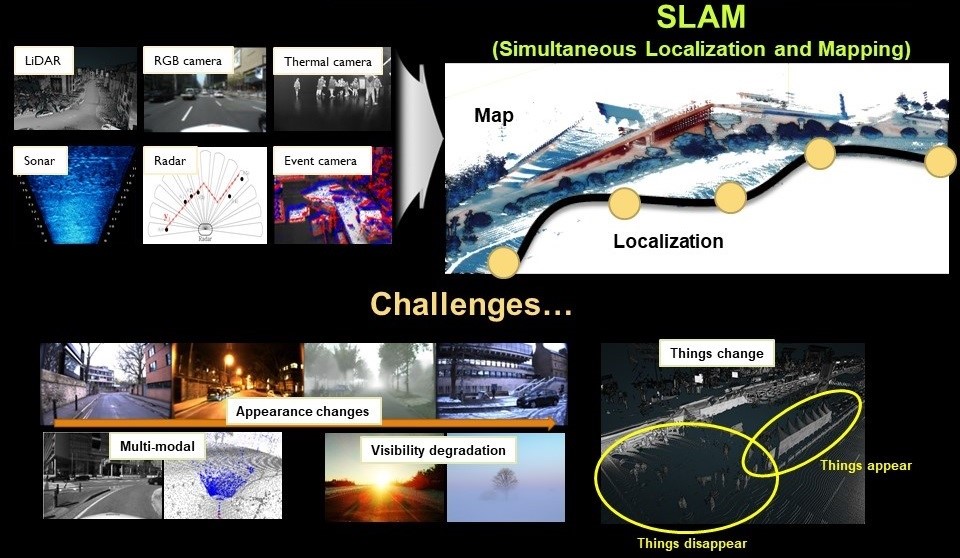

One essential technology in this field is Simultaneous Localization and Mapping, commonly known as SLAM. It allows robots and autonomous vehicles to build a map of an unknown environment while simultaneously estimating their own location in real time. As a robot or vehicle moves through a space, SLAM processes large volumes of data from various sensors to map the terrain and to estimate the machine's position relative to the newly mapped area. This dual functionality is what makes SLAM so crucial. In practice, as an autonomous car drives down a street or a service robot navigates a building, it not only identifies and records its surroundings but also continually re-estimates its own position. This continual self-localization and adaptability enable safe and efficient operation in dynamic, ever-changing environments.
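The joint estimation at the heart of SLAM can be illustrated with a deliberately small example. The sketch below is not the lab's method; it assumes a robot moving along a line, a single landmark, and noisy linear measurements, and solves for all poses and the landmark position together by least squares. The function names (`solve`, `least_squares`, `slam_1d`) and the sample numbers are hypothetical.

```python
def solve(a, b):
    """Solve the square linear system a @ x = b by Gaussian elimination."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        # Partial pivoting: move the largest entry of this column to the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def least_squares(rows, rhs):
    """Minimise ||rows @ x - rhs||^2 via the normal equations."""
    n = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(n)] for i in range(n)]
    atb = [sum(r[i] * y for r, y in zip(rows, rhs)) for i in range(n)]
    return solve(ata, atb)


def slam_1d(odometry, landmark_offsets):
    """Jointly estimate robot poses and one landmark on a line.

    odometry[k-1]       measures x_k - x_{k-1}, for k = 1..N
    landmark_offsets[k] measures l - x_k,       for k = 0..N
    The first pose x_0 is fixed at 0 to anchor the map.
    """
    n_steps = len(odometry)
    if len(landmark_offsets) != n_steps + 1:
        raise ValueError("need one landmark measurement per pose")
    n_unknowns = n_steps + 1  # x_1..x_N and the landmark l
    lm = n_steps              # column index of the landmark
    rows, rhs = [], []
    for k, u in enumerate(odometry, start=1):
        row = [0.0] * n_unknowns
        row[k - 1] = 1.0       # +x_k
        if k > 1:
            row[k - 2] = -1.0  # -x_{k-1} (x_0 is the fixed origin)
        rows.append(row)
        rhs.append(u)
    for k, z in enumerate(landmark_offsets):
        row = [0.0] * n_unknowns
        row[lm] = 1.0          # +l
        if k > 0:
            row[k - 1] = -1.0  # -x_k
        rows.append(row)
        rhs.append(z)
    est = least_squares(rows, rhs)
    return [0.0] + est[:n_steps], est[lm]


# Hypothetical noisy measurements for two motion steps.
poses, landmark = slam_1d([1.1, 0.9], [5.0, 4.1, 2.9])
print("poses:", poses, "landmark:", landmark)
```

Because the odometry and the landmark measurements enter one system of equations, an error in either is corrected by the other, which is the dual role described above. Real SLAM systems work with nonlinear models in two or three dimensions and many landmarks.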

Among its diverse applications, the RPM Robotics Lab focuses primarily on unstructured environments, such as construction sites. In robotics, unstructured environments are areas that lack predefined, predictable, or consistently organized features. They are dynamic and change frequently, which poses substantial challenges. Construction sites are characterized by numerous moving objects and a layout that changes almost daily, offering very few stable, man-made landmarks for reference. As construction progresses, a significant portion of the site remains in this unstructured state. This fluidity and unpredictability make it particularly challenging for robots to navigate and operate, requiring advanced technology and adaptive algorithms.[1, 2]

The RPM Robotics Lab aims not merely to create maps but to handle dynamic, ever-changing terrain. For a robot, mapping alone is insufficient; it must also recognize, understand, and adapt to changes in its environment. Managing the resulting maps is therefore a central task, yet a difficult one, because the maps can grow over time until they become unwieldy. Professor Kim's team has developed a solution to this problem. Their algorithm focuses on the 'delta', that is, the changes within the map. By using these delta maps, they have significantly streamlined map management, improving efficiency and enabling easy access to specific map instances.[3, 4]
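To make the idea of storing changes rather than whole maps concrete, here is a minimal Python sketch. It is not the lab's algorithm: it assumes a map can be reduced to a set of occupied voxel indices, and the names `Delta`, `DeltaMapStore`, `commit`, and `instance` are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set, Tuple

Voxel = Tuple[int, int, int]  # hypothetical integer voxel index


@dataclass
class Delta:
    """Changes between two consecutive mapping sessions."""
    added: Set[Voxel]
    removed: Set[Voxel]


class DeltaMapStore:
    """Keeps one base map plus a list of per-session deltas."""

    def __init__(self, base: Iterable[Voxel]) -> None:
        self.base = frozenset(base)
        self.deltas: List[Delta] = []
        self._latest: Set[Voxel] = set(self.base)

    def commit(self, observed: Iterable[Voxel]) -> Delta:
        """Record a new session by storing only what changed."""
        observed = set(observed)
        delta = Delta(added=observed - self._latest,
                      removed=self._latest - observed)
        self.deltas.append(delta)
        self._latest = observed
        return delta

    def instance(self, session: int) -> Set[Voxel]:
        """Rebuild the map as it was after `session` commits (0 = base)."""
        if not 0 <= session <= len(self.deltas):
            raise IndexError("unknown session")
        voxels = set(self.base)
        for delta in self.deltas[:session]:
            voxels -= delta.removed
            voxels |= delta.added
        return voxels


# Hypothetical usage: a wall segment is removed and a new one appears.
store = DeltaMapStore({(0, 0, 0), (1, 0, 0)})
change = store.commit({(1, 0, 0), (2, 0, 0)})
print("added:", sorted(change.added), "removed:", sorted(change.removed))
print("session 1:", sorted(store.instance(1)))
```

In this sketch storage grows with the amount of change rather than with the size of the site, and any earlier map instance can be rebuilt by replaying deltas up to that session.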

In environmental perception research, RGB cameras and Light Detection and Ranging (LiDAR) sensors are the dominant choices. However, both depend on light, which makes them less effective in adverse conditions such as fog, heavy rain, and dust. To counteract this limitation, various solutions have been proposed, including sensors that operate at longer wavelengths. Sensors that function beyond the visible spectrum are generally more resilient to these extreme conditions. Despite their benefits, such non-conventional sensors, like radars and thermal cameras, present their own challenges, especially their complex noise models.[5, 6]

Professor Kim's laboratory has conducted extensive research on these non-conventional sensors, spanning from a fundamental understanding of sensor operation to practical applications. The RPM Robotics Lab has made noteworthy contributions in this specialized field, including enabling robot navigation in dense indoor fog and obtaining accurate estimates during nighttime operation.[7, 8]

Lastly, this sensor-based navigation methodology can be extended to other robotic tasks, including manipulation. It is particularly important for grasping, when a robot approaches an object in order to manipulate it. The extension to manipulators works by treating the target objects as landmarks, as in robot navigation. The RPM Robotics Lab has successfully estimated the 6D poses of objects, even in challenging environments with varying lighting conditions and severe occlusion.[9, 10]
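The link between object pose estimation and landmark-based navigation can be shown with rigid-body transforms. The sketch below is an illustration under stated assumptions, not the lab's estimator: a 6D pose (3D rotation plus 3D translation) is written as a 4x4 homogeneous matrix, and the helper names `make_pose`, `compose`, and `invert` are hypothetical. For brevity `make_pose` only builds rotations about the z axis, although `compose` and `invert` apply to any rigid transform.

```python
import math


def make_pose(yaw, tx, ty, tz):
    """4x4 rigid transform: rotation by `yaw` about z, then translation."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


def compose(a, b):
    """Matrix product a @ b, chaining two rigid transforms."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert(t):
    """Inverse of a rigid transform: rotation R^T, translation -R^T p."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [-sum(r_t[i][k] * t[k][3] for k in range(3)) for i in range(3)]
    return [r_t[i] + [p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


# Hypothetical values: camera pose in the world, object pose seen by the camera.
T_world_cam = make_pose(math.pi / 2, 1.0, 0.0, 0.0)
T_cam_obj = make_pose(0.0, 2.0, 0.0, 0.0)

# Mapping direction: place the observed object in the world frame.
T_world_obj = compose(T_world_cam, T_cam_obj)

# Localization direction: with the object's world pose known, the same
# observation recovers the camera pose, exactly as a landmark would.
T_world_cam_recovered = compose(T_world_obj, invert(T_cam_obj))

print("object position in world:", [row[3] for row in T_world_obj[:3]])
```

The same observation serves both directions: it places the object in the world when the camera pose is known, and it localizes the camera when the object pose is known.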

Figure. A localization and mapping module is essential for robot navigation. During estimation, many different sensors are adopted, including LiDAR, cameras, thermal cameras, radars, sonar, and event cameras.

References

1. G. Kim et al., Trans. on Robotics, 2022, 38(3), 1856-1874.

2. M. Jung et al., Robot and Auto Letter, 2023, 8(7), 4211-4218.

3. G. Kim et al., ICRA, 2022.

4. G. Kim et al., IROS, 2020.

5. Y. Park et al., Robot and Auto Letter, 2021, 6(4), 7691-7698.

6. Y. Shin et al., Auto. Robots, 2020, 44(2), 115-130.

7. Y. Park et al., IROS, 2019.

8. Y. Shin et al., Robot and Auto Letter, 2019, 4(3), 2918-2925.

9. M. Jeon et al., Robot and Auto Letter, 2019, 5(3), 4955-4962.

10. M. Jeon et al., Robot and Auto Letter, 2022, 8(1), 137-144.
